
Deploying a Hadoop Cluster on Cubieboard: A Guide

Abstract

Hadoop is a free, Java-based programming framework that supports the processing of large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation. You can use A10 Cubieboards to build a Hadoop cluster based on the Lubuntu (v1.04) image, which comes with JDK 1.8 (armhf) integrated. You need to configure the JDK environment, SSH between the master and the slaves, and the Hadoop configuration parameters.

Getting started

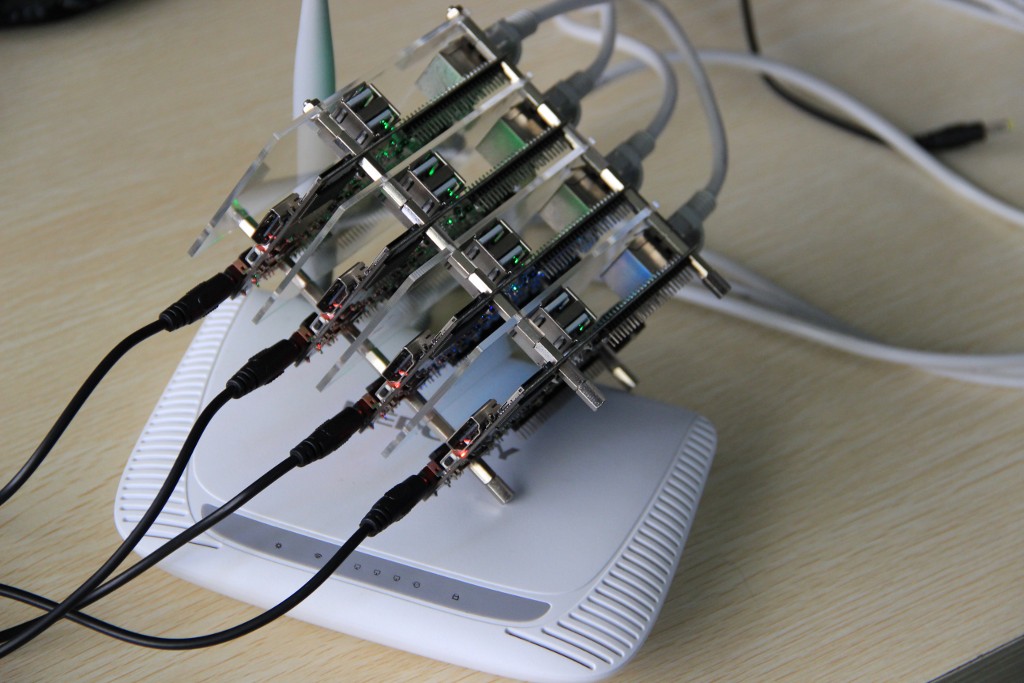

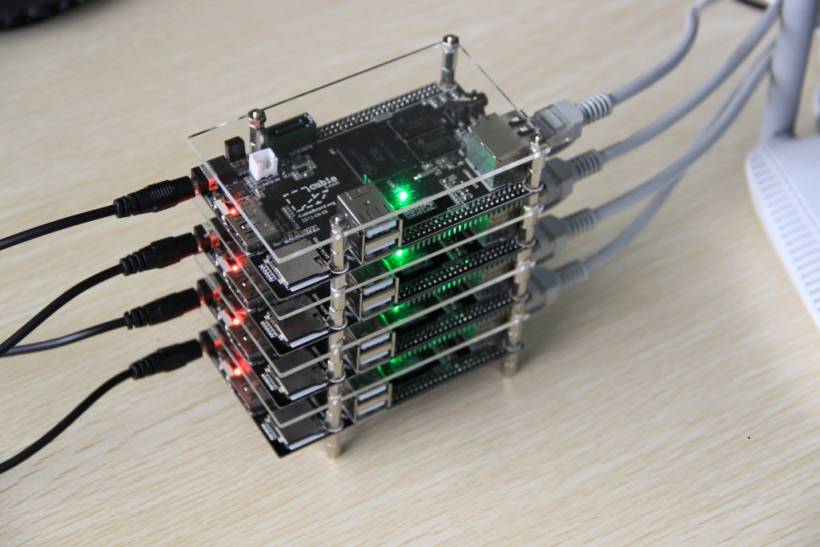

A router, which provides the LAN for the cluster

hadoop_0.20.203, available from http://hadoop.apache.org/common/releases.html

Lubuntu 12.04 v1.04 image (with JDK 1.8), plus power and network cables

Users and network

One master and three slaves, all connected to the same LAN; every node must be able to ping every other node.

For master

Create a user

    sudo addgroup hadoop
    sudo adduser --ingroup hadoop hadoop
    sudo visudo    # add the line: hadoop ALL=(ALL:ALL) ALL

Hosts and hostname

    sudo vim /etc/hosts
    # add these lines:
    192.168.1.40 master
    192.168.1.41 slave1
    192.168.1.42 slave2
    192.168.1.43 slave3

    sudo vim /etc/hostname
    # replace "cubieboard" with "master"
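Since the same host list is needed on every board, a small helper that appends it only once can save retyping. This is a minimal sketch, assuming bash and the four addresses used in this guide; the function name add_cluster_hosts is hypothetical, not part of Ubuntu or Hadoop.

```bash
#!/usr/bin/env bash
# Sketch: append this guide's cluster entries to a hosts file, skipping any
# entry that is already present as an exact line, so it is safe to re-run.
# add_cluster_hosts is a hypothetical helper; edit the list for your LAN.

CLUSTER_HOSTS=(
  "192.168.1.40 master"
  "192.168.1.41 slave1"
  "192.168.1.42 slave2"
  "192.168.1.43 slave3"
)

# Usage: add_cluster_hosts [FILE]   (FILE defaults to /etc/hosts, which needs root)
add_cluster_hosts() {
  local file="${1:-/etc/hosts}" entry
  for entry in "${CLUSTER_HOSTS[@]}"; do
    # -x: match the whole line, -F: treat the entry as a fixed string
    if ! grep -qxF "$entry" "$file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$file"
    fi
  done
}
```

Call it from a root shell (for example after sudo -i) so it can write /etc/hosts; the hostname in /etc/hostname still has to be set per board.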

For each slave

Repeat the same steps on each slave. For example, on slave1:

Create a user

    sudo addgroup hadoop
    sudo adduser --ingroup hadoop hadoop
    sudo visudo    # add the line: hadoop ALL=(ALL:ALL) ALL

Hosts and hostname

    sudo vim /etc/hosts
    # add at least these lines (listing all four nodes, as on master, also works):
    192.168.1.40 master
    192.168.1.41 slave1

    sudo vim /etc/hostname
    # replace "cubieboard" with "slave1"

Static IP settings

For each node

    sudo vim /etc/network/interfaces
    # keep the loopback entries, comment out DHCP for eth0 and add a static
    # stanza; use each node's own address (.40 master, .41-.43 slave1-slave3):
    auto lo
    iface lo inet loopback
    #iface eth0 inet dhcp
    auto eth0
    iface eth0 inet static
    address 192.168.1.40
    gateway 192.168.1.1
    netmask 255.255.255.0
    network 192.168.1.0
    broadcast 192.168.1.255

    sudo vim /etc/resolvconf/resolv.conf.d/base
    # add:
    nameserver 192.168.1.1
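Only the address line differs between boards, so the stanza can be printed from one template. A minimal sketch, assuming bash, interface eth0, and the 192.168.1.0/24 network with the router at 192.168.1.1 as in this guide; interfaces_stanza is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: print a complete /etc/network/interfaces for one node.
# Assumes eth0 and this guide's 192.168.1.0/24 network; interfaces_stanza
# is a hypothetical helper name.

# Usage: interfaces_stanza ADDRESS   e.g. interfaces_stanza 192.168.1.41
interfaces_stanza() {
  local addr="${1:-}"
  [ -n "$addr" ] || { echo "usage: interfaces_stanza ADDRESS" >&2; return 1; }
  cat <<EOF
auto lo
iface lo inet loopback

auto eth0
iface eth0 inet static
address ${addr}
gateway 192.168.1.1
netmask 255.255.255.0
network 192.168.1.0
broadcast 192.168.1.255
EOF
}
```

Review the output first; something like interfaces_stanza 192.168.1.41 | sudo tee /etc/network/interfaces replaces the whole file, so keep a backup of the original.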

Before going on, make sure every Cubieboard has the hadoop user and a static IP, and that all nodes can ping each other.

SSH server

master<no passwd>slave1

master<no passwd>slave2

master<no passwd>slave3

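Each pair above needs the following procedure run once in each direction. To let node A log in to node B without a password:

On A:

    ssh-keygen -t rsa -P ''                        # empty passphrase; accept the default file
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 600 ~/.ssh/authorized_keys
    ssh localhost                                  # should not ask for a password
    scp ~/.ssh/id_rsa.pub hadoop@192.168.1.40:~/   # B's address (here: master)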

On B:

    mkdir -p ~/.ssh
    chmod 700 ~/.ssh
    cat ~/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 600 ~/.ssh/authorized_keys
    rm ~/id_rsa.pub
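The steps on B above append the key every time they run, so repeating them leaves duplicate entries. A minimal sketch of the same step made safe to re-run, assuming bash and the default ~/.ssh layout; authorize_key is a hypothetical helper. On stock OpenSSH installs, running ssh-copy-id hadoop@slave1 on A does both halves in one command.

```bash
#!/usr/bin/env bash
# Sketch: authorize a copied public key on B. Creates ~/.ssh if needed,
# appends the key only if it is not already listed, and sets the
# permissions sshd expects (700 on ~/.ssh, 600 on authorized_keys).
# authorize_key is a hypothetical helper name.

# Usage: authorize_key [PUBKEY_FILE]   (defaults to ~/id_rsa.pub, as above)
authorize_key() {
  local pub="${1:-$HOME/id_rsa.pub}"
  local dir="$HOME/.ssh" auth="$HOME/.ssh/authorized_keys"
  [ -r "$pub" ] || { echo "cannot read $pub" >&2; return 1; }
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$auth" && chmod 600 "$auth"
  # -x whole line, -F fixed string, -f read the key line from $pub
  if ! grep -qxFf "$pub" "$auth"; then
    cat "$pub" >> "$auth"
  fi
}
```

After it succeeds, rm ~/id_rsa.pub as in the original steps.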

master to slave1

    hadoop@master:~$ ssh slave1
    Welcome to Linaro 13.04 (GNU/Linux 3.4.43+ armv7l)
     * Documentation: https://wiki.linaro.org/
    Last login: Thu Oct 17 03:38:36 2013 from master

slave1 to master

    hadoop@slave1:~$ ssh master
    Welcome to Linaro 13.04 (GNU/Linux 3.4.43+ armv7l)
     * Documentation: https://wiki.linaro.org/
    Last login: Thu Oct 17 03:38:58 2013 from slave1

JDK

JDK path modification

    hadoop@master:~$ sudo vim /etc/profile
    # add:
    export JAVA_HOME=/lib/jdk

Log in again (or run: source /etc/profile) for the change to take effect, and make the same change on every other node.
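/etc/profile is read by login shells, but start-all.sh starts the slave daemons over non-interactive SSH, which typically does not read it; in Hadoop 0.20.x the usual place to give the daemons JAVA_HOME is conf/hadoop-env.sh, and bin/hadoop stops with "Error: JAVA_HOME is not set." when it is missing. A minimal sketch, assuming the JDK lives at /lib/jdk on every board as above; set_hadoop_java_home is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: make sure CONF_DIR/hadoop-env.sh exports JAVA_HOME, because daemons
# launched over non-interactive SSH may not see /etc/profile.
# set_hadoop_java_home is a hypothetical helper, not a Hadoop script.

# Usage: set_hadoop_java_home CONF_DIR [JAVA_HOME]   (JAVA_HOME defaults to /lib/jdk)
set_hadoop_java_home() {
  local conf="${1:-}" jdk="${2:-/lib/jdk}" env_file
  [ -d "$conf" ] || { echo "no such directory: $conf" >&2; return 1; }
  env_file="$conf/hadoop-env.sh"
  # An uncommented "export JAVA_HOME=" line means it is already configured;
  # the commented-out template line does not count.
  if grep -qE '^[[:space:]]*export[[:space:]]+JAVA_HOME=' "$env_file" 2>/dev/null; then
    echo "JAVA_HOME already set in $env_file"
    return 0
  fi
  printf 'export JAVA_HOME=%s\n' "$jdk" >> "$env_file"
}
```

For this guide that would be set_hadoop_java_home /work/hadoop/conf /lib/jdk on the host computer (as root if the directory is root-owned), before the tree is copied to the boards, so every node gets the same setting.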

Hadoop configuration

On master, edit core-site.xml, hdfs-site.xml and mapred-site.xml in /hadoop/hadoop_0.20.203_master/conf. You can also do the Hadoop configuration on your host computer and copy the finished tree to the boards, as shown here:

    aaron@cubietech:/work$ sudo mv hadoop_0.20.203 hadoop
    aaron@cubietech:/work$ cd hadoop/conf/
    aaron@cubietech:/work/hadoop/conf$ sudo vim core-site.xml

core-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://master:9000</value>
      </property>
    </configuration>
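The three *-site.xml files share one structure (a configuration element holding name/value property pairs), so they can also be generated rather than typed. A minimal sketch, assuming bash; write_site_xml is a hypothetical helper, and it inserts values verbatim without XML escaping, so values must not contain characters such as < or &.

```bash
#!/usr/bin/env bash
# Sketch: write a Hadoop *-site.xml file from NAME VALUE pairs, with the same
# structure as the core-site.xml shown above. write_site_xml is a hypothetical
# helper; values are not XML-escaped.

# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
write_site_xml() {
  if [ $# -lt 3 ] || [ $(( ($# - 1) % 2 )) -ne 0 ]; then
    echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  local file="$1"
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
    echo '<!-- Put site-specific property overrides in this file. -->'
    echo '<configuration>'
    while [ $# -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Examples matching this guide:
#   write_site_xml core-site.xml   fs.default.name    hdfs://master:9000
#   write_site_xml mapred-site.xml mapred.job.tracker master:9001
```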

hdfs-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>dfs.name.dir</name>
        <value>/usr/local/hadoop/datalog1,/usr/local/hadoop/datalog2</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/usr/local/hadoop/data1,/usr/local/hadoop/data2</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
      </property>
    </configuration>
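With dfs.replication set to 2, HDFS stores each block on two different DataNodes. A rough capacity estimate, assuming the three slaves are the DataNodes, writing C_i for the disk space available to slave i's DataNode, and ignoring space reserved for non-HDFS use:

```latex
C_{\text{usable}} \approx \frac{1}{r}\sum_{i=1}^{3} C_i,
\qquad r = \texttt{dfs.replication} = 2 \le 3 \text{ DataNodes}
```

The replication factor should not exceed the number of DataNodes; otherwise blocks remain under-replicated.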

mapred-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>master:9001</value>
      </property>
    </configuration>
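The start scripts in Hadoop 0.20.x also read two plain-text host lists in the same conf directory: conf/masters names the host for the SecondaryNameNode, and conf/slaves names the hosts that each run a DataNode and a TaskTracker (the NameNode and JobTracker start on the machine where start-all.sh is run). The shipped files contain only localhost, so for this cluster they would normally be edited as well. A minimal sketch, assuming the hostnames from /etc/hosts above; write_host_lists is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: write conf/masters and conf/slaves for the cluster in this guide.
# In Hadoop 0.20.x, start-all.sh starts a SecondaryNameNode on the hosts in
# conf/masters and a DataNode + TaskTracker on each host in conf/slaves.
# write_host_lists is a hypothetical helper name.

# Usage: write_host_lists CONF_DIR MASTER SLAVE [SLAVE ...]
write_host_lists() {
  if [ $# -lt 3 ] || [ ! -d "$1" ]; then
    echo "usage: write_host_lists CONF_DIR MASTER SLAVE [SLAVE ...]" >&2
    return 1
  fi
  local conf="$1" master="$2"
  shift 2
  printf '%s\n' "$master" > "$conf/masters"
  printf '%s\n' "$@" > "$conf/slaves"   # one hostname per line
}

# Example, on the host computer before copying Hadoop to the boards:
#   write_host_lists /work/hadoop/conf master slave1 slave2 slave3
```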

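After that, copy the hadoop directory to every node:

    scp -r hadoop root@192.168.1.40:/usr/local
    scp -r hadoop root@192.168.1.41:/usr/local
    scp -r hadoop root@192.168.1.42:/usr/local
    scp -r hadoop root@192.168.1.43:/usr/local

Because the files are copied as root, give them to the hadoop user on each node (sudo chown -R hadoop:hadoop /usr/local/hadoop); otherwise the daemons, which run as hadoop, cannot create their log and data directories.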
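The copy commands differ only in the address, and because the copies are made as root, the tree also has to be handed to the hadoop user that the daemons run as. A minimal sketch of both steps as one loop, assuming root SSH logins are allowed on each board (as these scp commands already require) and that the hadoop user exists everywhere; push_hadoop and the RUN dry-run variable are hypothetical conveniences.

```bash
#!/usr/bin/env bash
# Sketch: copy ./hadoop to /usr/local on every node, then give it to the
# hadoop user. push_hadoop is a hypothetical helper; with RUN=echo the
# commands are only printed, not executed.

NODES=(192.168.1.40 192.168.1.41 192.168.1.42 192.168.1.43)

# Usage: push_hadoop   (run from the directory containing "hadoop", e.g. /work)
push_hadoop() {
  local ip
  for ip in "${NODES[@]}"; do
    ${RUN:-} scp -r hadoop "root@${ip}:/usr/local" || return 1
    ${RUN:-} ssh "root@${ip}" chown -R hadoop:hadoop /usr/local/hadoop || return 1
  done
}
```

Running RUN=echo push_hadoop first shows exactly which commands would be executed.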

How to run

    hadoop@master:~$ cd /usr/local/hadoop/

Format the HDFS file system on master before the first start:

    bin/hadoop namenode -format

    hadoop@master:/usr/local/hadoop$ bin/hadoop namenode -format
    13/10/17 05:49:16 INFO namenode.NameNode: STARTUP_MSG:
    /************************************************************
    STARTUP_MSG: Starting NameNode
    STARTUP_MSG: host = master/192.168.1.40
    STARTUP_MSG: args = [-format]
    STARTUP_MSG: version = 0.20.203.0
    STARTUP_MSG: build = http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20-security-203 -r 1099333; compiled by 'oom' on Wed May 4 07:57:50 PDT 2011
    ************************************************************/
    Re-format filesystem in /usr/local/hadoop/datalog1 ? (Y or N) Y
    Re-format filesystem in /usr/local/hadoop/datalog2 ? (Y or N) Y
    13/10/17 05:49:22 INFO util.GSet: VM type = 32-bit
    13/10/17 05:49:22 INFO util.GSet: 2% max memory = 19.335 MB
    13/10/17 05:49:22 INFO util.GSet: capacity = 2^22 = 4194304 entries
    13/10/17 05:49:22 INFO util.GSet: recommended=4194304, actual=4194304
    13/10/17 05:49:24 INFO namenode.FSNamesystem: fsOwner=hadoop
    13/10/17 05:49:24 INFO namenode.FSNamesystem: supergroup=supergroup
    13/10/17 05:49:24 INFO namenode.FSNamesystem: isPermissionEnabled=true
    13/10/17 05:49:24 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
    13/10/17 05:49:24 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
    13/10/17 05:49:24 INFO namenode.NameNode: Caching file names occuring more than 10 times
    13/10/17 05:49:26 INFO common.Storage: Image file of size 112 saved in 0 seconds.
    13/10/17 05:49:26 INFO common.Storage: Storage directory /usr/local/hadoop/datalog1 has been successfully formatted.
    13/10/17 05:49:26 INFO common.Storage: Image file of size 112 saved in 0 seconds.
    13/10/17 05:49:27 INFO common.Storage: Storage directory /usr/local/hadoop/datalog2 has been successfully formatted.
    13/10/17 05:49:27 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at master/192.168.1.40
    ************************************************************/
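Formatting creates a fresh, empty namespace. Re-running it on a cluster that already holds data (the Re-format prompts in the log above show this happening) discards the HDFS metadata, and DataNodes that kept their old data directories may then refuse to start with an "Incompatible namespaceIDs" error. A minimal guard, assuming the two dfs.name.dir directories from hdfs-site.xml and that a formatted storage directory contains current/VERSION; format_namenode_once is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: run "bin/hadoop namenode -format" only if none of the name
# directories has been formatted before. format_namenode_once is a
# hypothetical helper; the defaults are this guide's dfs.name.dir entries.

# Usage: format_namenode_once [NAME_DIR ...]
format_namenode_once() {
  local hadoop_home="${HADOOP_HOME:-/usr/local/hadoop}" dir
  [ $# -gt 0 ] || set -- /usr/local/hadoop/datalog1 /usr/local/hadoop/datalog2
  for dir in "$@"; do
    # A formatted storage directory contains current/VERSION.
    if [ -f "$dir/current/VERSION" ]; then
      echo "refusing to format: $dir is already formatted" >&2
      return 1
    fi
  done
  "$hadoop_home/bin/hadoop" namenode -format
}
```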

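Start the cluster, check HDFS, and stop it again:

    bin/start-all.sh              # start all daemons
    bin/hadoop dfsadmin -report   # report HDFS capacity and live DataNodes
    bin/stop-all.sh               # stop all daemons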
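jps, which ships with the JDK (assuming the board's JDK includes it), lists running Java processes as "<pid> <main class>" lines, which gives a quick way to check whether start-all.sh actually started everything. In this layout master would normally show NameNode and JobTracker (plus SecondaryNameNode if master is also the secondary host), and each slave DataNode and TaskTracker. A minimal sketch; missing_daemons is a hypothetical helper that reads jps output on stdin.

```bash
#!/usr/bin/env bash
# Sketch: report which expected Hadoop daemons are absent from jps output.
# jps prints one "<pid> <main class>" line per running JVM.
# missing_daemons is a hypothetical helper name.

# Usage: jps | missing_daemons NAME [NAME ...]
# Prints "missing: NAME" for each absent daemon; returns 1 if any is missing.
missing_daemons() {
  local running d status=0
  running=$(awk '{print $2}')   # keep only the class-name column
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$d"; then
      echo "missing: $d"
      status=1
    fi
  done
  return "$status"
}

# Examples:
#   on master:  jps | missing_daemons NameNode SecondaryNameNode JobTracker
#   on a slave: jps | missing_daemons DataNode TaskTracker
```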

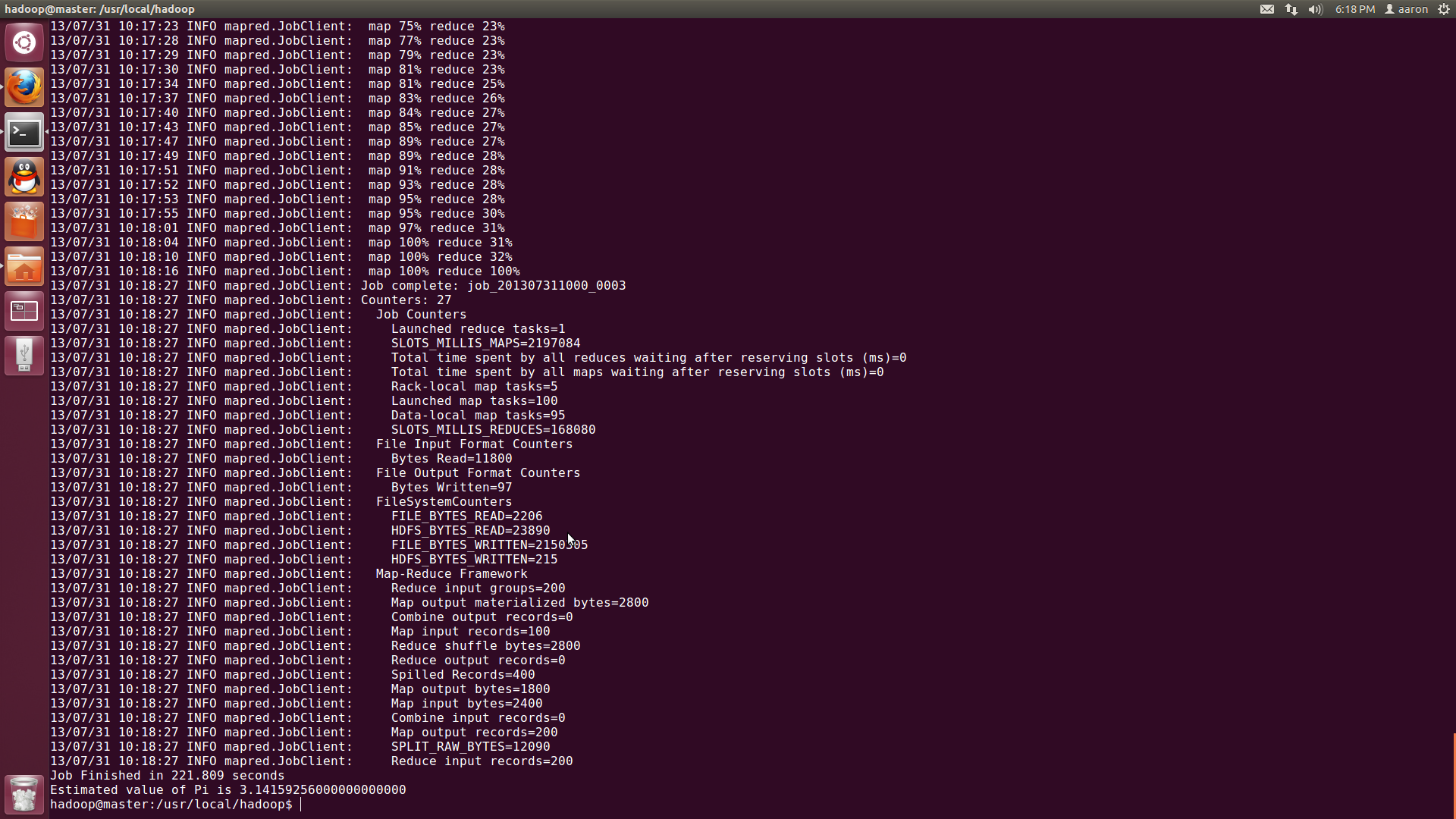

Run the bundled pi example to test the cluster:

    ./bin/hadoop jar hadoop-examples-0.20.203.0.jar pi 100 100
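The two arguments to the pi example are the number of map tasks and the number of samples per map, so pi 100 100 evaluates 100 × 100 = 10,000 points. The points lie in the unit square, and the fraction that lands inside the inscribed circle approximates the ratio of the two areas:

```latex
\frac{N_{\text{inside}}}{N_{\text{total}}} \approx \frac{\pi\,(1/2)^2}{1^2} = \frac{\pi}{4}
\quad\Longrightarrow\quad
\pi \approx 4\,\frac{N_{\text{inside}}}{N_{\text{total}}},
\qquad N_{\text{total}} = 100 \times 100 = 10^4
```

More maps spread the work over more TaskTrackers; more samples per map make the estimate more precise.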

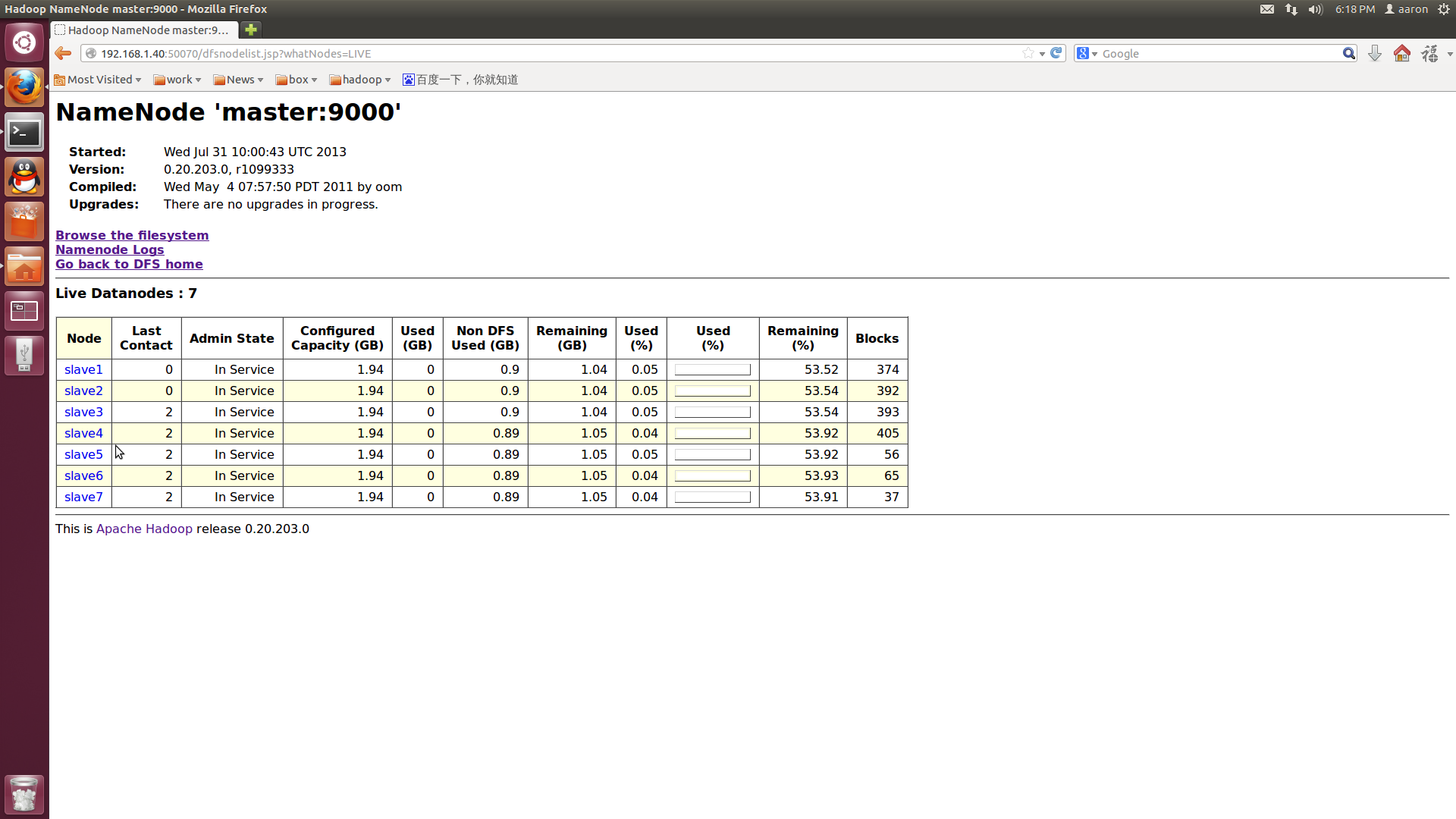

You can also monitor the cluster in a web browser:

http://192.168.1.40:50030 (JobTracker: MapReduce jobs)

http://192.168.1.40:50070 (NameNode: HDFS file system)

You can also read this tutorial

http://www.cnblogs.com/xia520pi/archive/2012/05/16/2503949.html