Deploying Hadoop Cluster On Cubieboard Guide

About this Article

- Author: Cubieboard Documentations — aaron@cubietech.com — 2013/10/15 09:22

- Copyrights: CC Attribution-Share Alike 3.0 Unported

Abstract

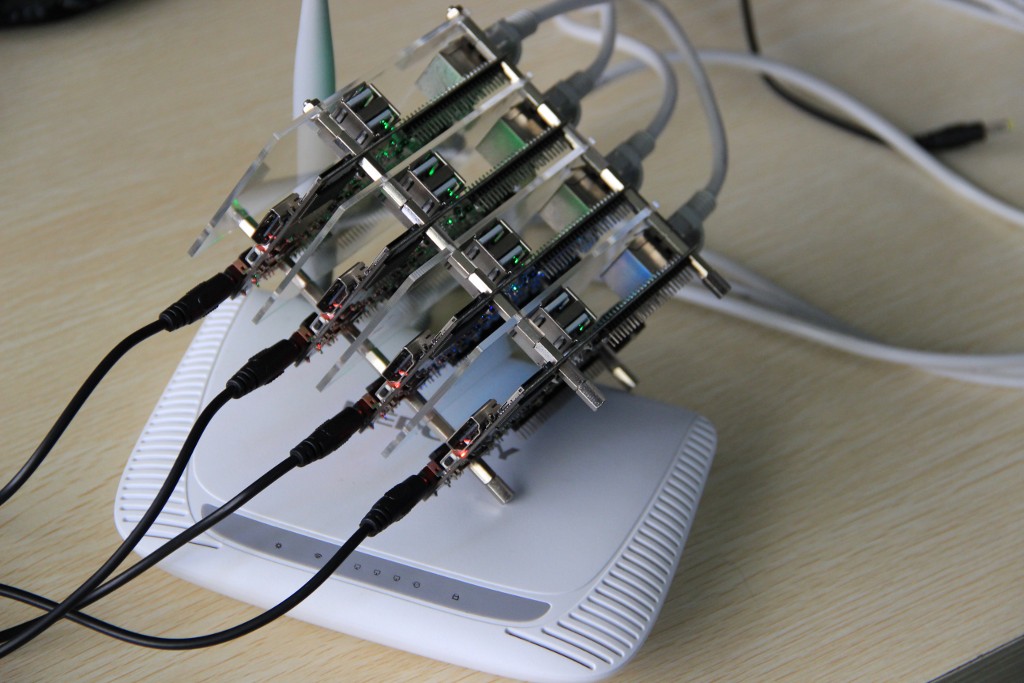

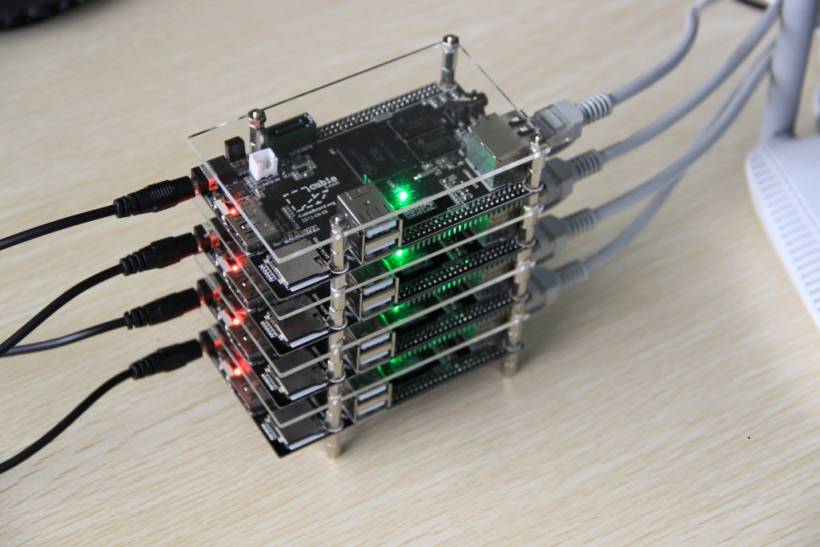

Hadoop is a free, Java-based programming framework that supports the processing of large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation. You can use A10 Cubieboards to build a Hadoop cluster based on the Lubuntu (v1.04) image, which ships with jdk-1.8 armhf integrated. Three things need to be configured: the JDK environment, the SSH server on the master and slaves, and the Hadoop configuration parameters.

Getting started

Users and network

The cluster has one master and three slaves on the same WLAN network. The nodes are connected over the LAN and must be able to ping each other.

For master

Create a user

    sudo addgroup hadoop
    sudo adduser --ingroup hadoop hadoop
    sudo vim /etc/sudoers

Add:

    hadoop ALL=(ALL:ALL) ALL

Hosts and hostname

    sudo vim /etc/hosts

Add:

    192.168.1.40 master
    192.168.1.41 slave1
    192.168.1.42 slave2
    192.168.1.43 slave3

Then set the hostname:

    sudo vim /etc/hostname

Change cubieboard to master.
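Typing the host table by hand on every node is easy to get wrong. The entries can also be printed by a small script. This is a minimal sketch, assuming the addresses are consecutive and start at the master's address as in the table above; the function name `hosts_entries` and its arguments are hypothetical.

```shell
#!/bin/sh
# hosts_entries PREFIX FIRST_OCTET SLAVE_COUNT
# Prints one "address name" line for the master and for each slave.
# Assumes consecutive addresses with the master first. That layout is
# taken from this guide's example, it is not a Hadoop requirement.
hosts_entries() {
    prefix=$1
    first=$2
    slaves=$3
    echo "$prefix.$first master"
    i=1
    while [ "$i" -le "$slaves" ]; do
        echo "$prefix.$((first + i)) slave$i"
        i=$((i + 1))
    done
}

# Example: print the four entries used in this guide.
hosts_entries 192.168.1 40 3
```

After reviewing the output, it could be appended with `hosts_entries 192.168.1 40 3 | sudo tee -a /etc/hosts`.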

For each slave

Do the same on each slave. For example, on slave1, add the user:

    sudo addgroup hadoop
    sudo adduser --ingroup hadoop hadoop
    sudo vim /etc/sudoers

Add:

    hadoop ALL=(ALL:ALL) ALL

Hosts and hostname

    sudo vim /etc/hosts

Add:

    192.168.1.40 master
    192.168.1.41 slave1

Then set the hostname:

    sudo vim /etc/hostname

Change cubieboard to slave1.

Static IP settings

For each node

    sudo vim /etc/network/interfaces

Add:

    #auto lo
    # iface lo inet loopback
    #iface lo eth0 dhcp
    auto eth0
    iface eth0 inet static
    address 192.168.1.40
    gateway 192.168.1.1
    netmask 255.255.255.0
    network 192.168.1.0
    broadcast 192.168.1.255

The address shown is the master's. On each slave, use that node's own address (192.168.1.41 for slave1, and so on).

    sudo vim /etc/resolvconf/resolv.conf.d/base

Add this:

    nameserver 192.168.1.1
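Only the address line differs between nodes, so the stanza can be generated per node rather than retyped. This is a sketch, not a definitive setup: the function name `static_stanza` is hypothetical, and the gateway, netmask, network and broadcast values are simply the ones used in this guide.

```shell
#!/bin/sh
# static_stanza ADDRESS
# Prints an eth0 static stanza for one node. All values except the
# address are copied from this guide's example network.
static_stanza() {
    cat <<EOF
auto eth0
iface eth0 inet static
    address $1
    gateway 192.168.1.1
    netmask 255.255.255.0
    network 192.168.1.0
    broadcast 192.168.1.255
EOF
}

# Example: the stanza for slave1.
static_stanza 192.168.1.41
```

The output is meant to be reviewed and then pasted into /etc/network/interfaces on the matching node.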

SSH server

Set up the master and the slaves so that they can log in to each other without a password.

Master

    ssh-keygen -t rsa -P ''
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 600 ~/.ssh/authorized_keys
    ssh localhost
    scp ~/.ssh/id_rsa.pub hadoop@192.168.1.41:~/

Repeat the scp command for each slave (192.168.1.42 and 192.168.1.43).

Slaves

    mkdir ~/.ssh
    chmod 700 ~/.ssh
    cat ~/id_rsa.pub >> ~/.ssh/authorized_keys
    chmod 600 ~/.ssh/authorized_keys
    rm -r ~/id_rsa.pub
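Passwordless login often fails silently when the permissions on these files are too loose, since sshd usually refuses keys in that case. The check below is a minimal sketch that tests for the exact modes set by the chmod commands above. The function name `check_ssh_perms` is hypothetical, and `stat -c %a` assumes GNU coreutils, as on Lubuntu.

```shell
#!/bin/sh
# check_ssh_perms DIR
# Returns 0 and prints "ok" if DIR has mode 700 and DIR/authorized_keys
# has mode 600, the modes set by the chmod commands in this guide.
# Relies on `stat -c %a` from GNU coreutils (an assumption about the host).
check_ssh_perms() {
    dir=$1
    [ "$(stat -c %a "$dir")" = "700" ] || {
        echo "bad mode on $dir"
        return 1
    }
    [ "$(stat -c %a "$dir/authorized_keys")" = "600" ] || {
        echo "bad mode on $dir/authorized_keys"
        return 1
    }
    echo "ok"
}
```

On a slave, it could be run as `check_ssh_perms "$HOME/.ssh"` before testing `ssh slave1` from the master.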

JDK

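JDK path modification

Set JAVA_HOME to the JDK path. In Hadoop 0.20.x this is normally done in conf/hadoop-env.sh, where the line must be uncommented:

    export JAVA_HOME=/lib/jdk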
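A wrong JAVA_HOME usually only shows up when the daemons fail to start, so it can help to verify the path first. This is a small sketch: the function name `check_java_home` is hypothetical, and it only checks that a `bin/java` executable exists under the given directory.

```shell
#!/bin/sh
# check_java_home DIR
# Returns 0 if DIR/bin/java exists and is executable, 1 otherwise.
check_java_home() {
    if [ -x "$1/bin/java" ]; then
        echo "JAVA_HOME ok: $1"
    else
        echo "no executable java under $1/bin" >&2
        return 1
    fi
}
```

With the path from this guide, that would be `check_java_home /lib/jdk && /lib/jdk/bin/java -version`.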

Hadoop configuration

Hadoop configuration parameters

Edit core-site.xml, hdfs-site.xml and mapred-site.xml in /hadoop/hadoop_0.20.203_master/conf.

core-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://master:9000</value>
      </property>
    </configuration>

hdfs-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <property>
        <name>dfs.name.dir</name>
        <value>/usr/local/hadoop/datalog1,/usr/local/hadoop/datalog2</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/usr/local/hadoop/data1,/usr/local/hadoop/data2</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
      </property>
    </configuration>

mapred-site.xml

    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!-- Put site-specific property overrides in this file. -->
    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>master:9001</value>
      </property>
    </configuration>
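Since the same three files are needed on every node, they can be generated rather than edited by hand. The sketch below writes them with the property values from this guide. The helper names `property`, `site_file` and `write_site_files` are hypothetical, and the values are inserted without XML escaping, so it only suits plain values like the ones shown here.

```shell
#!/bin/sh
# property NAME VALUE
# Prints one Hadoop <property> element (no XML escaping is done).
property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# site_file
# Wraps the <property> elements read from stdin in the XML header
# and the <configuration> element.
site_file() {
    echo '<?xml version="1.0"?>'
    echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
    echo '<configuration>'
    cat
    echo '</configuration>'
}

# write_site_files DIR MASTER
# Writes the three site files from this guide into DIR, using MASTER
# as the NameNode and JobTracker host.
write_site_files() {
    dir=$1
    master=$2
    property fs.default.name "hdfs://$master:9000" | site_file > "$dir/core-site.xml"
    {
        property dfs.name.dir /usr/local/hadoop/datalog1,/usr/local/hadoop/datalog2
        property dfs.data.dir /usr/local/hadoop/data1,/usr/local/hadoop/data2
        property dfs.replication 2
    } | site_file > "$dir/hdfs-site.xml"
    property mapred.job.tracker "$master:9001" | site_file > "$dir/mapred-site.xml"
}
```

For example, `write_site_files /hadoop/hadoop_0.20.203_master/conf master` would overwrite the three files in the conf directory, so it is worth backing them up first.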

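How to run

Run these commands on the master, from the Hadoop directory:

    bin/hadoop namenode -format    # format HDFS (first run only)
    bin/start-all.sh               # start
    bin/hadoop dfsadmin -report    # report cluster status
    bin/hadoop jar hadoop-examples-0.20.203.0.jar pi 100 100    # calculate pi
    bin/stop-all.sh                # stop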
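After starting the cluster, the JDK's `jps` tool lists the running Java processes, which gives a quick way to see whether any daemon failed to come up. The filter below is a minimal sketch with a hypothetical function name, `missing_daemons`. The daemon names in the usage note are the ones a Hadoop 0.20.x setup like this typically runs, stated here as an assumption rather than taken from this guide.

```shell
#!/bin/sh
# missing_daemons NAME...
# Reads `jps` output ("PID ClassName" lines) on stdin and prints every
# expected daemon name that is not running. Prints nothing if all are up.
missing_daemons() {
    running=$(awk '{print $2}')
    for name in "$@"; do
        # -x matches the whole line, so NameNode does not match
        # SecondaryNameNode.
        echo "$running" | grep -qx "$name" || echo "$name"
    done
}
```

On the master this might be used as `jps | missing_daemons NameNode SecondaryNameNode JobTracker`, and on a slave as `jps | missing_daemons DataNode TaskTracker`.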